Azure Private Endpoints: Deployment Scenarios Explained

Compare single-region, hub-and-spoke, hybrid and multi-region private endpoint deployments with practical DNS, traffic inspection and cost-management guidance.

Azure Private Endpoints let you securely connect to Azure services using private IPs from your virtual network, bypassing the public internet. This reduces exposure to threats and supports compliance needs. Deployment methods like single-region setups, hub-and-spoke models, hybrid clouds, and multi-region designs impact cost, security, and complexity. Key considerations include DNS configuration, traffic routing, and cost management. Here’s what you need to know:

- Single-region setups are simple but offer limited redundancy.

- Hub-and-spoke models centralise private DNS zones, simplifying management for multiple networks.

- Hybrid configurations connect on-premises systems to Azure services using DNS forwarders or Azure Private Resolver.

- Multi-region designs improve disaster recovery but increase costs and complexity.

Careful planning ensures your deployment meets your needs for security, scalability, and cost-efficiency. Proper DNS setup, traffic inspection with Azure Firewall, and optimising storage account endpoints are crucial for smooth implementation. Prioritising early architectural decisions avoids costly adjustments later.

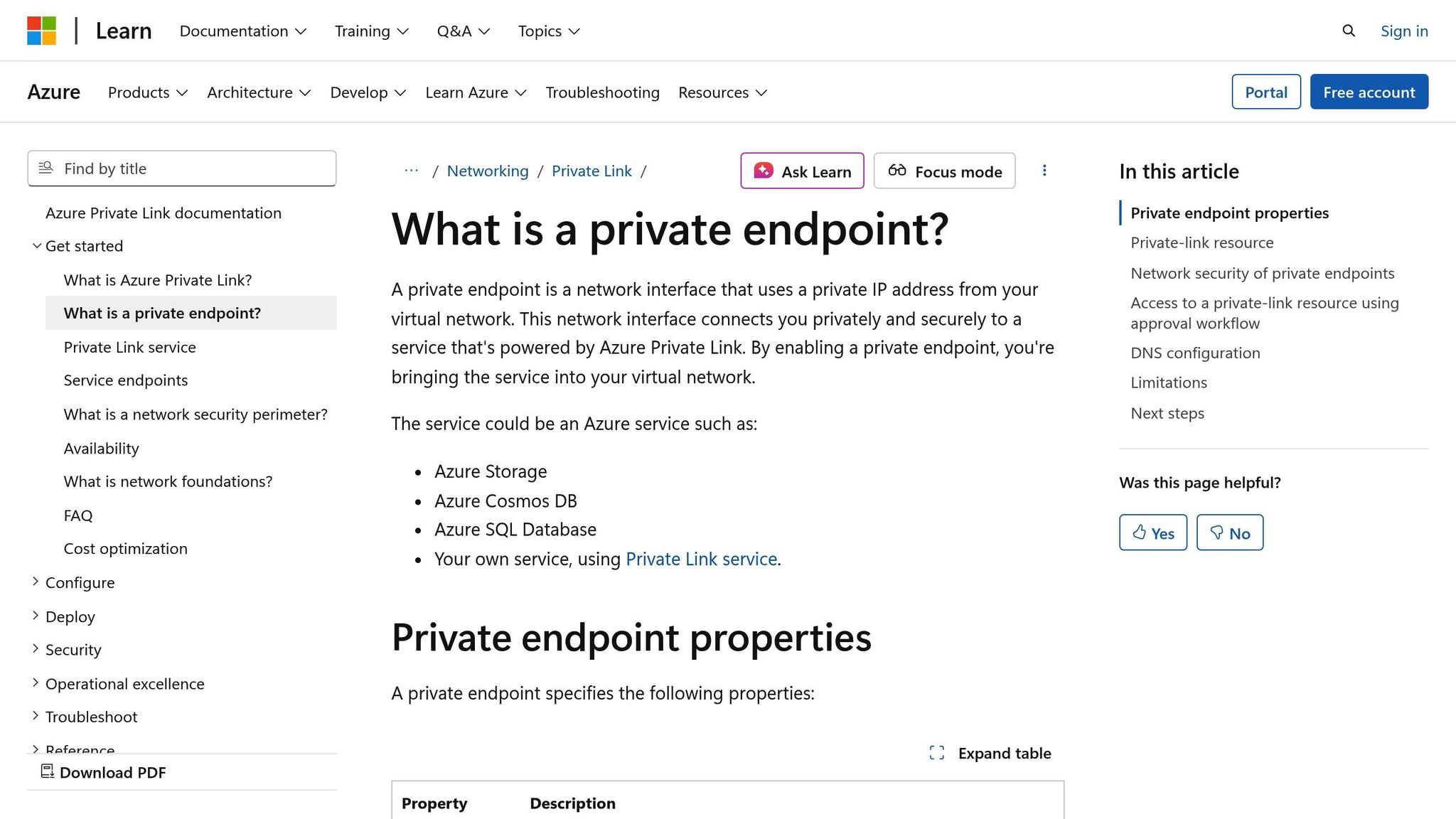

What Are Azure Private Endpoints?

Azure Private Endpoints let you connect securely to Azure services using a private IP address from your virtual network, bypassing the public internet. Instead of reaching the service through its public IP address, clients connect to a private IP allocated from your subnet (e.g., 10.0.1.5), so the connection stays on private network paths.

The technology behind this is Azure Private Link, which creates a private connection from your virtual network to Azure services. When you set up a private endpoint, it gets a network interface with a private IP from your subnet, and that IP maps to a specific instance of the service. Traffic between your network and the service stays on Microsoft's backbone network rather than crossing the internet, adding a layer of security; encryption in transit is still provided by the service's own protocols, such as TLS.

This setup is particularly useful for organisations handling sensitive data, like customer information or financial records, as it ensures all traffic stays within controlled boundaries, enhancing compliance and data protection.

How Private Endpoints Function

For a private endpoint to work, several components need to come together. It must be deployed within a specific subnet, which can host other resources as well. Additionally, a private DNS zone is configured to map the service’s fully qualified domain name (FQDN) to the endpoint’s private IP address. The endpoint is then securely linked to the Azure service through a Private Link connection.

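DNS resolution plays a key role here. Clients keep using the service's normal FQDN: public DNS returns a CNAME into the matching privatelink zone (for example, mystorage.blob.core.windows.net points to mystorage.privatelink.blob.core.windows.net). When that private DNS zone is linked to the client's virtual network, the Azure-provided DNS service (168.63.129.16) answers with the private endpoint's IP instead of the public one. For workloads in the virtual network that don't use custom DNS servers, this happens automatically once the zone is linked. On-premises clients can't query 168.63.129.16 directly, so their queries must reach a DNS forwarder or Azure Private Resolver running in a virtual network that is linked to the private DNS zone.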
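
To make the CNAME chain concrete, here is a simplified in-memory model of the lookup. It is a sketch, not how Azure DNS is implemented: the account names and addresses are hypothetical, 203.0.113.x is a documentation range standing in for public IPs, and the real public chain has extra hops.

```python
# Simplified model of private endpoint name resolution (hypothetical names and IPs).
PUBLIC_DNS = {
    "mystorage.blob.core.windows.net": ("CNAME", "mystorage.privatelink.blob.core.windows.net"),
    "mystorage.privatelink.blob.core.windows.net": ("A", "203.0.113.10"),
    "other.blob.core.windows.net": ("CNAME", "other.privatelink.blob.core.windows.net"),
    "other.privatelink.blob.core.windows.net": ("A", "203.0.113.20"),
}

PRIVATE_ZONE_NAME = "privatelink.blob.core.windows.net"
PRIVATE_RECORDS = {
    # Only "mystorage" has a private endpoint registered in the private zone.
    "mystorage.privatelink.blob.core.windows.net": ("A", "10.0.1.5"),
}


def resolve(name: str, zone_linked: bool, max_hops: int = 8) -> str:
    """Follow the CNAME chain; names in a linked private zone are answered from that zone."""
    for _ in range(max_hops):
        if zone_linked and name.endswith("." + PRIVATE_ZONE_NAME):
            # By default a linked zone is authoritative: a missing record means NXDOMAIN.
            if name not in PRIVATE_RECORDS:
                raise LookupError(f"NXDOMAIN: {name}")
            rtype, value = PRIVATE_RECORDS[name]
        elif name in PUBLIC_DNS:
            rtype, value = PUBLIC_DNS[name]
        else:
            raise LookupError(f"NXDOMAIN: {name}")
        if rtype == "A":
            return value
        name = value  # CNAME: keep resolving the target name
    raise LookupError("CNAME chain too long")
```

From a linked virtual network, `resolve("mystorage.blob.core.windows.net", zone_linked=True)` follows the CNAME into the private zone and returns the private IP, while an unlinked client gets the public address. The "other" account illustrates a common pitfall: once the zone is linked, an account without a private endpoint record fails to resolve rather than falling back to its public IP.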

Azure services often have multiple sub-resources, and choosing the correct one is essential. For example, Azure Storage accounts require separate private endpoints for each service - Blobs, Data Lake Storage, Files, Queues, Tables, or Static Websites. If you create a private endpoint for Blob Storage but not for Data Lake Storage, any operation needing a DFS private endpoint will fail.
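
As a planning aid, the sketch below maps each storage sub-resource to the privatelink zone its endpoint registers in and derives the names a client looks up. It assumes public Azure cloud hostnames and a hypothetical account name; static website (web) hostnames include an extra zone segment, so that sub-resource is left out.

```python
# Storage sub-resources (target group IDs) and the private DNS zone each one uses.
STORAGE_PRIVATELINK_ZONES = {
    "blob": "privatelink.blob.core.windows.net",
    "dfs": "privatelink.dfs.core.windows.net",
    "file": "privatelink.file.core.windows.net",
    "queue": "privatelink.queue.core.windows.net",
    "table": "privatelink.table.core.windows.net",
}


def endpoint_plan(account: str, sub_resources: list[str]) -> list[tuple[str, str, str]]:
    """One entry per private endpoint: (sub-resource, public FQDN, privatelink FQDN)."""
    plan = []
    for sub in sub_resources:
        zone = STORAGE_PRIVATELINK_ZONES[sub]  # raises KeyError for an unsupported sub-resource
        public_suffix = zone.removeprefix("privatelink.")
        plan.append((sub, f"{account}.{public_suffix}", f"{account}.{zone}"))
    return plan
```

Calling `endpoint_plan("mystorage", ["blob", "dfs"])` yields two separate entries, a reminder that each sub-resource needs its own endpoint and its own zone.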

For Azure App Service, private endpoints are configured separately for each deployment slot, with support for up to 100 private endpoints per slot. If you're not using Azure DNS private zone groups, you'll also need to create an additional DNS record pointing to the private endpoint IP for the Kudu/SCM endpoint.

Private endpoint connections go through an approval workflow. If the person creating the endpoint has the required permissions on the target resource, the connection can be approved automatically; otherwise it enters a "Pending" state until the Private Link resource owner reviews it. The owner can approve or reject the connection, and a connection the owner removes shows as "Disconnected", giving them complete control over access.
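
The workflow can be pictured as a small state machine. This is a simplified model under stated assumptions: the transition table is illustrative and does not claim to capture every rule Azure enforces.

```python
from enum import Enum


class ConnState(Enum):
    PENDING = "Pending"
    APPROVED = "Approved"
    REJECTED = "Rejected"
    DISCONNECTED = "Disconnected"


# Assumed, simplified transition table for illustration only.
ALLOWED = {
    ConnState.PENDING: {ConnState.APPROVED, ConnState.REJECTED},
    ConnState.APPROVED: {ConnState.REJECTED, ConnState.DISCONNECTED},
    ConnState.REJECTED: {ConnState.DISCONNECTED},
    ConnState.DISCONNECTED: set(),
}


def initial_state(creator_can_approve: bool) -> ConnState:
    """Automatic approval when the creator has rights on the resource, else Pending."""
    return ConnState.APPROVED if creator_can_approve else ConnState.PENDING


def transition(current: ConnState, target: ConnState) -> ConnState:
    """Move to the target state, refusing transitions the simplified model disallows."""
    if target not in ALLOWED[current]:
        raise ValueError(f"cannot move from {current.value} to {target.value}")
    return target
```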

These technical details form the foundation for the deployment strategies discussed in the next section.

Why Deployment Patterns Matter

Understanding how private endpoints work helps you tailor deployment strategies to your specific needs, whether they’re focused on security, cost efficiency, or redundancy.

In hub-and-spoke architectures, private DNS zones are centralised in a hub network, allowing multiple spoke networks to share a single zone. This simplifies DNS management and reduces overhead. However, each DNS zone group supports up to five DNS zones, and you can’t add multiple DNS zone groups to a single private endpoint.

Hybrid cloud setups, which combine on-premises infrastructure with Azure services, use private endpoints alongside DNS forwarders or Azure Private Resolver. On-premises conditional forwarders should be configured for the public DNS zone (like database.windows.net) rather than for the privatelink zone.

Multi-region deployments offer better availability and disaster recovery. Because a private endpoint can be deployed in a region different from the resource it targets, workloads in a secondary region can keep their own endpoints; combined with a service that replicates or fails over across regions, this helps maintain connectivity during a regional outage. However, this approach may increase latency and incur cross-region traffic costs.

Traffic inspection needs also shape deployment patterns. In hub-and-spoke models with a dedicated virtual network for private endpoints, traffic can be routed through Azure Firewall for logging and inspection. If private endpoints and virtual machines share a virtual network, each private endpoint adds a /32 system route to the virtual machines' effective routes, so you need a matching /32 user-defined route per endpoint to send that traffic through Azure Firewall.

Choosing the right deployment strategy early on ensures your architecture aligns with your security needs, budget, and scalability plans. For smaller applications, a single-region setup might suffice, whereas a hub-and-spoke model is better suited for managing multiple applications across various networks.

Common Deployment Patterns for Private Endpoints

When deciding on a deployment pattern for private endpoints, factors like your organisation's scale, location, and connectivity needs play a crucial role. Each pattern comes with its own set of strengths and trade-offs, particularly in terms of complexity, cost, and resilience. Below, we explore some common deployment strategies tailored to different operational requirements.

Single-Region Setup

The single-region setup is the simplest deployment option, ideal for operations confined to a single region. Each service type gets a private DNS zone linked to the virtual network, and workloads query the Azure-provided DNS service (168.63.129.16) to resolve endpoint names. This keeps management straightforward and costs predictable by avoiding cross-region charges. However, its resilience is limited: a regional outage can disrupt operations. This setup is less suitable for mission-critical applications or for organisations planning to expand geographically or integrate with on-premises infrastructure, as it lacks the flexibility and fault tolerance of more advanced configurations.

Hub-and-Spoke Architecture with Shared Endpoints

The hub-and-spoke architecture centralises private endpoints within a hub network, enabling multiple spoke networks to share a single private DNS zone. This design simplifies DNS management and reduces the risk of configuration errors. It also helps lower virtual network peering costs compared to full-mesh topologies. However, there are limitations: each DNS zone group supports up to five DNS zones, and a private endpoint cannot belong to multiple DNS zone groups - careful planning is essential to avoid complications. For small and medium-sized businesses scaling their Azure infrastructure, this pattern strikes a balance between simplicity and flexibility.

Hybrid Cloud Configuration

Hybrid cloud setups bridge on-premises systems with Azure services, allowing on-premises workloads to access private endpoints. To achieve this, DNS resolution must be extended from the on-premises environment to Azure. A common approach involves deploying a DNS forwarder within a virtual network linked to the private DNS zone. Alternatively, Azure Private Resolver offers a fully managed, scalable solution that reduces operational overhead. Whichever you choose, on-premises queries must be resolved by Azure DNS from inside the linked virtual network; if they bypass it, clients receive the service's public IP instead of the private one. Before moving to production, test DNS resolution from on-premises clients to confirm that each endpoint name returns its private IP.
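
A quick way to run that test is a small script executed on an on-premises machine. The sketch below uses the host's configured DNS servers via `socket.getaddrinfo` and checks each answer with `ipaddress.is_private`; the FQDNs you pass in are your own, and the pluggable `resolver` parameter exists only so the logic can be tried without a network.

```python
import ipaddress
import socket
from typing import Callable, Dict, Iterable, List


def system_resolver(fqdn: str) -> List[str]:
    """Resolve an FQDN to IPv4 addresses using the host's configured DNS servers."""
    infos = socket.getaddrinfo(fqdn, 443, family=socket.AF_INET, type=socket.SOCK_STREAM)
    return sorted({info[4][0] for info in infos})


def check_private_resolution(
    fqdns: Iterable[str],
    resolver: Callable[[str], List[str]] = system_resolver,
) -> Dict[str, bool]:
    """True for an FQDN only if it resolves and every returned address is private."""
    results = {}
    for fqdn in fqdns:
        try:
            addresses = resolver(fqdn)
        except OSError:  # socket.gaierror is a subclass of OSError
            results[fqdn] = False
            continue
        results[fqdn] = bool(addresses) and all(
            ipaddress.ip_address(a).is_private for a in addresses
        )
    return results
```

Any FQDN that maps to `False` either failed to resolve or returned a public address, which usually points to a forwarding problem.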

Multi-Region Disaster Recovery

Multi-region deployments provide enhanced availability and disaster recovery by maintaining private endpoint connectivity even during regional outages. Private endpoints can be deployed in a region different from the target Azure resource, offering greater architectural flexibility. Organisations can choose between a shared DNS zone - which may slow failover during updates - or regional DNS zones, which offer faster local recovery at the cost of added complexity. It's important to weigh potential latency and data transfer costs against the organisation’s recovery time objectives and the criticality of its applications. This setup is particularly valuable for businesses prioritising high availability and robust disaster recovery capabilities.

Advanced Configurations and Implementation Tips

Once you've set up the basic deployment for your private endpoints, there are several advanced configurations that can refine security, improve DNS management, and ensure seamless access across Azure storage services. These tips are especially useful for more complex setups.

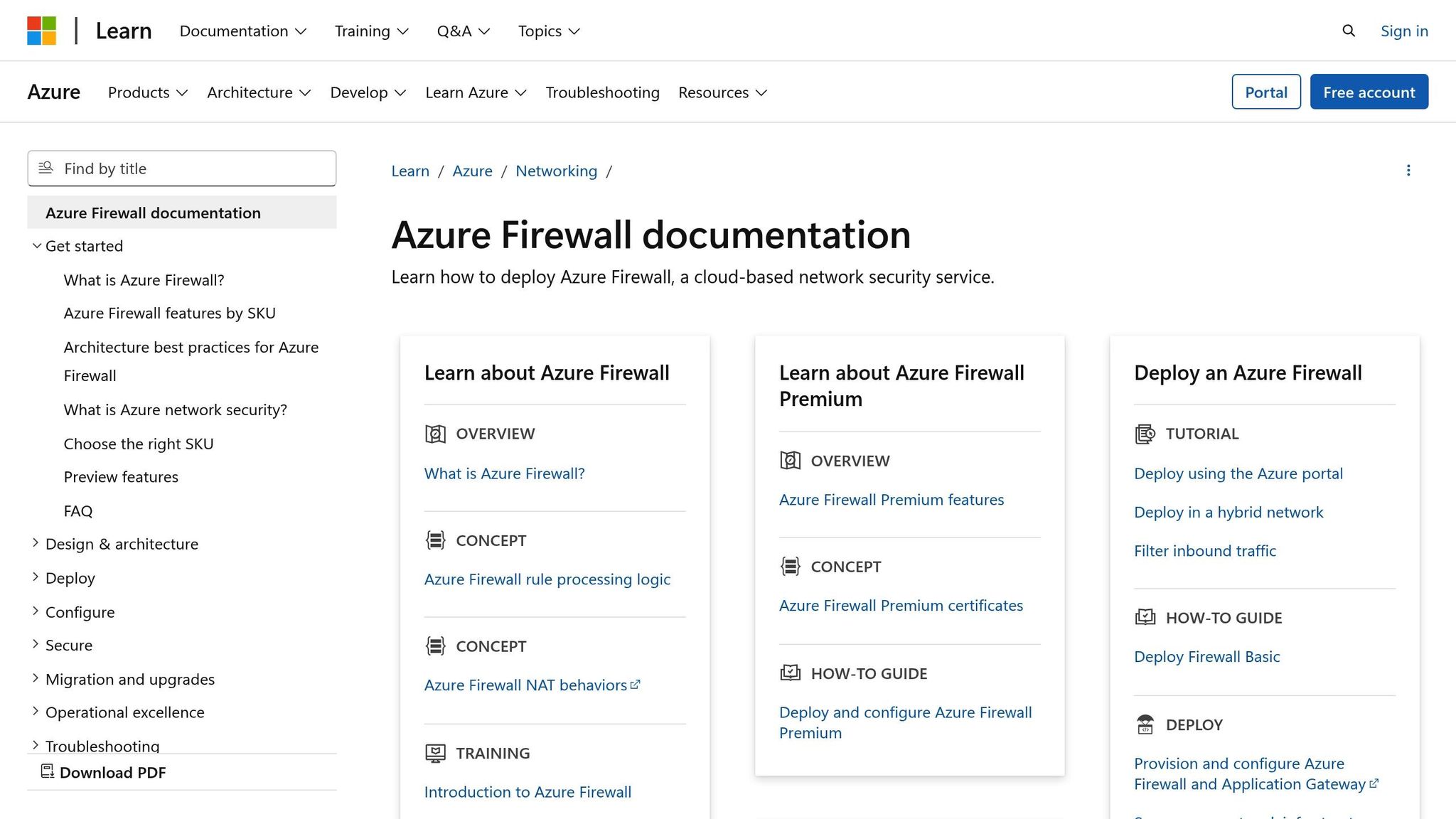

Traffic Inspection with Azure Firewall

If your security policies require inspecting traffic directed to services through private endpoints, Azure Firewall is an effective solution, particularly in hub-and-spoke architectures. There are two main ways to implement this inspection, depending on your specific needs.

The dedicated virtual network approach involves setting up a separate virtual network solely for private endpoints. This design is ideal for managing connections to multiple Azure services, as it simplifies route management and avoids hitting the 400-route limit. It’s particularly useful for larger deployments where scalability and streamlined management are priorities.

On the other hand, the shared virtual network scenario places both private endpoints and virtual machines within the same network. In this setup, you add a /32 user-defined route for each private endpoint so that traffic to it passes through Azure Firewall. This works well when creating a dedicated virtual network isn't practical or when only a few services are involved. While this method requires more detailed route management, it’s a solid option for smaller deployments or environments with tight virtual network constraints.
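
To compare the route-table footprint of the two approaches, here is a sketch that builds the user-defined routes each one needs and checks them against the 400-route limit. The dictionary shape, endpoint addresses and firewall IP are hypothetical; in practice you would express these routes in your infrastructure-as-code tool.

```python
import ipaddress

ROUTE_TABLE_LIMIT = 400  # per-route-table limit cited in this article


def shared_vnet_routes(endpoint_ips: list[str], firewall_ip: str) -> list[dict]:
    """Shared virtual network: one /32 user-defined route per private endpoint."""
    return [
        {"prefix": f"{ipaddress.ip_address(ip)}/32", "next_hop": firewall_ip}
        for ip in endpoint_ips
    ]


def dedicated_vnet_routes(endpoint_vnet_prefix: str, firewall_ip: str) -> list[dict]:
    """Dedicated virtual network: one route covering the endpoint address space."""
    prefix = ipaddress.ip_network(endpoint_vnet_prefix)  # validates the CIDR
    return [{"prefix": str(prefix), "next_hop": firewall_ip}]


def fits_route_table(new_routes: list[dict], existing_routes: int = 0) -> bool:
    """True if the routes can be added without exceeding the per-table limit."""
    return existing_routes + len(new_routes) <= ROUTE_TABLE_LIMIT
```

With 450 endpoints, the shared layout needs 450 routes and no longer fits a single table, whereas the dedicated layout still needs just one. That difference is why the dedicated pattern scales better.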

For organisations with on-premises workloads that need traffic inspection, Azure Firewall can also be deployed following the shared virtual network model. This eliminates the costs associated with virtual network peering, which can be a significant saving compared to hub-and-spoke models. However, Azure Firewall’s compute and throughput costs should be considered. For high traffic volumes and stringent security needs, this investment is usually worthwhile. Smaller setups, however, might explore alternatives like network security groups or application-level controls.

Storage Account Private Endpoints

Each Azure Storage service - Blobs, Data Lake, Files, Queues, Tables, and Static Websites - requires its own private endpoint. This means careful planning is key to avoid configuration issues.

One best practice is to create private endpoints for both Data Lake Storage and Blob Storage at the same time, even if you think you’ll only need one initially. Why? Operations that target the Data Lake Storage endpoint might be redirected to the Blob endpoint, and operations such as managing access control lists (ACLs) or creating and deleting directories require the Data Lake Storage (dfs) endpoint. With only one of the two endpoints in place, some of these operations will fail.

This dual-endpoint setup ensures uninterrupted operations and saves you from potential headaches later on. For organisations managing multiple storage accounts, this approach becomes even more critical, making early planning essential to avoid gaps in configuration.
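
For many accounts, a small audit can flag gaps before deployment. The sketch assumes the accounts are Data Lake Storage accounts (hierarchical namespace enabled) and that your endpoint plan is available as a hypothetical mapping of account name to planned sub-resources.

```python
def missing_companions(planned: set[str]) -> set[str]:
    """Sub-resources to add so that blob and dfs endpoints are deployed together."""
    missing = set()
    if "dfs" in planned and "blob" not in planned:
        missing.add("blob")  # dfs operations may be redirected to the Blob endpoint
    if "blob" in planned and "dfs" not in planned:
        missing.add("dfs")  # ACL and directory operations need the dfs endpoint
    return missing


def audit_accounts(plans: dict[str, set[str]]) -> dict[str, set[str]]:
    """Report only the accounts whose endpoint plan has a blob/dfs gap."""
    gaps = {}
    for account, planned in plans.items():
        missing = missing_companions(planned)
        if missing:
            gaps[account] = missing
    return gaps
```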

DNS Configuration Tips

Proper DNS configuration is essential for private endpoint deployments, particularly in hybrid and hub-and-spoke setups. To enable on-premises workloads to resolve the fully qualified domain name (FQDN) of a private endpoint, you’ll need a DNS forwarder within the virtual network linked to the private DNS zone. This forwarder - often a virtual machine running DNS services or Azure Firewall - handles DNS queries from on-premises networks and forwards them to Azure DNS.

DNS queries for private endpoints must be resolved from the virtual network linked to the private DNS zone, and a DNS forwarder ensures this by acting as a proxy for on-premises clients. When setting up conditional forwarding for custom DNS solutions, configure it for the recommended public DNS zone (e.g., database.windows.net) rather than the privatelink zone. That way the client's original query for the public name reaches Azure, which follows the CNAME into the private zone and returns the private IP.
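
The matching rule an on-premises DNS server applies can be sketched as a longest-suffix lookup. The forwarder address is hypothetical (it stands for a forwarder VM or resolver inbound endpoint), and real DNS servers have their own configuration syntax; this only illustrates why the public zone is the right key.

```python
AZURE_FORWARDER = "10.0.0.68"  # hypothetical forwarder or resolver inbound endpoint IP

RECOMMENDED = {"database.windows.net": AZURE_FORWARDER}
PRIVATELINK_ONLY = {"privatelink.database.windows.net": AZURE_FORWARDER}


def pick_forwarder(qname: str, forwarders: dict[str, str], default: str = "public-dns") -> str:
    """Return the forwarder for the longest configured zone suffix of qname."""
    labels = qname.lower().rstrip(".").split(".")
    for i in range(len(labels)):  # i = 0 tries the full name, so longer suffixes win
        suffix = ".".join(labels[i:])
        if suffix in forwarders:
            return forwarders[suffix]
    return default
```

With the recommended table, a query for `sql01.database.windows.net` goes to Azure; with the privatelink-only table, the name clients actually query matches no rule and falls through to public DNS.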

For hybrid environments, Azure Private Resolver offers a streamlined alternative, forwarding requests for private endpoints to Azure DNS without needing traditional DNS forwarder VMs. This managed service reduces operational overhead and ensures consistent DNS management across on-premises and cloud workloads.

In hub-and-spoke architectures, a centralised approach to DNS zones works best. Create private DNS zones in the hub network and link them to all spoke networks, rather than duplicating zones in each spoke. This reduces administrative work and ensures consistent resolution. However, keep in mind that each DNS zone group supports up to five DNS zones, and you can’t add multiple DNS zone groups to a single private endpoint. Planning your DNS zone requirements early and consolidating zones where possible helps maintain consistency across the network.
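
Keeping every spoke linked is easy to lose track of as networks are added. A minimal coverage check, assuming you can export a hypothetical inventory of zone links from your infrastructure-as-code state, might look like this:

```python
def unlinked_vnets(zone_links: dict[str, set[str]], vnets: set[str]) -> dict[str, set[str]]:
    """For each centralised private DNS zone, list the VNets that still lack a link."""
    gaps = {}
    for zone, linked in zone_links.items():
        missing = vnets - linked
        if missing:
            gaps[zone] = missing
    return gaps
```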

For Azure App Service private endpoints, there are additional DNS considerations for the Kudu console and Kudu REST API, which are used for deployments with self-hosted agents. You’ll need two DNS records pointing to the private endpoint IP address: one for the app itself and another for its source control management (SCM) site, for example mywebapp.privatelink.azurewebsites.net and mywebapp.scm.privatelink.azurewebsites.net. Azure Private DNS zone groups simplify this by adding the SCM record automatically, reducing manual work and minimising errors.
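
If you manage the records yourself instead of using a zone group, the pair can be generated together so the SCM record is never forgotten. The app name and IP below are hypothetical, and the sketch assumes the public Azure cloud zone name.

```python
import ipaddress

APP_SERVICE_ZONE = "privatelink.azurewebsites.net"


def app_service_a_records(app_name: str, endpoint_ip: str) -> list[tuple[str, str]]:
    """(FQDN, IPv4) A records for the app and its SCM (Kudu) site, both on the endpoint IP."""
    ip = str(ipaddress.ip_address(endpoint_ip))  # validates the address
    return [
        (f"{app_name}.{APP_SERVICE_ZONE}", ip),
        (f"{app_name}.scm.{APP_SERVICE_ZONE}", ip),
    ]
```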

When allocating subnets for private endpoints, there’s no need to dedicate the entire subnet - other resources can share it. This makes network design more efficient. However, App Service's virtual network integration feature cannot use the same subnet as a private endpoint, so plan separate subnets for the two.

Planning and Cost Management

Thoughtful planning of Azure private endpoints ensures a balance between security, performance, and costs. This approach helps avoid expensive redesigns and supports scalable deployments.

Architecture Design and Resource Structure

How you design your architecture - specifically your subscription, resource group, and DNS zone structure - plays a big role in operational complexity and cost. A hub-and-spoke topology is often the best choice for managing multiple private endpoints, especially when working across teams or business units.

In this setup, a central hub subscription houses shared resources like private DNS zones, which are linked to multiple spoke subscriptions. This centralised approach offers several advantages:

- Simplifies management: Avoids duplicating DNS zones across virtual networks.

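- Prevents routing issues: Placing endpoints in a dedicated hub address space lets one prefix route replace many per-endpoint /32 routes, which helps you stay under Azure's 400-route limit per route table, crucial for larger setups.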

- Streamlines billing and access control: Teams can have separate subscriptions while sharing infrastructure.

For example, if you're deploying private endpoints for Azure SQL Database, you would create a single private DNS zone (privatelink.database.windows.net) in the hub subscription and link it to all spoke virtual networks. This avoids managing duplicate zones and keeps every endpoint record in one place, lowering operational overhead.

On the other hand, creating multiple zones with the same name for different virtual networks introduces manual operations to merge DNS records. This not only increases costs but also raises the risk of configuration errors. A centralised DNS zone ensures a single point of resolution for both virtual network and on-premises clients, particularly useful when using Azure Private Resolver for hybrid workloads.

Key considerations:

- Each DNS zone group supports up to five DNS zones, and you cannot add multiple DNS zone groups to a single private endpoint. Plan your DNS requirements early to maintain consistency.

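- Decide which storage sub-resources (blob, dfs, file and so on) each account needs before deploying: every private endpoint is billed while it exists, so unneeded endpoints add cost, while missing ones (such as dfs alongside blob) cause failures and rework.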

- Use shared subnets where feasible, but remember that virtual network integration features cannot share subnets with private endpoints.

- For App Service, allocate endpoints carefully - each slot supports up to 100 private endpoints. For instance, plan endpoints separately for staging and production slots to keep costs predictable.
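
The DNS zone group limits in the first bullet can be checked before deployment. This is a sketch over a hypothetical plan structure, not an Azure API; the limits encoded are the two stated in this article.

```python
from dataclasses import dataclass, field

MAX_ZONES_PER_GROUP = 5  # limit described in this article


@dataclass
class EndpointDnsPlan:
    endpoint: str
    zone_groups: dict[str, list[str]] = field(default_factory=dict)  # group name -> zones


def validate_dns_plan(plan: EndpointDnsPlan) -> list[str]:
    """Return human-readable problems; an empty list means the plan fits the limits."""
    problems = []
    if len(plan.zone_groups) > 1:
        problems.append(f"{plan.endpoint}: {len(plan.zone_groups)} DNS zone groups, only one allowed")
    for group, zones in plan.zone_groups.items():
        if len(zones) > MAX_ZONES_PER_GROUP:
            problems.append(f"{plan.endpoint}/{group}: {len(zones)} zones, maximum is {MAX_ZONES_PER_GROUP}")
    return problems
```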

Automating the cleanup of unused private endpoints is another essential step. Private endpoints are billed for every hour they are provisioned, whether or not they carry traffic, so removing idle ones saves money. Additionally, private endpoints can be deployed in different regions than the target resource, allowing you to optimise for both latency and cost.
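
A cleanup job needs a rule for what counts as unused. The sketch below assumes you already export per-endpoint traffic totals and connection states from your monitoring data into a hypothetical `EndpointUsage` record; it only produces a review list and deletes nothing.

```python
from dataclasses import dataclass


@dataclass
class EndpointUsage:
    name: str
    bytes_last_30_days: int  # hypothetical field, filled from your monitoring data
    connection_state: str  # "Pending", "Approved", "Rejected" or "Disconnected"


def cleanup_candidates(endpoints: list[EndpointUsage]) -> list[str]:
    """Endpoints to review: idle for the whole window, or not in the Approved state."""
    return sorted(
        e.name
        for e in endpoints
        if e.bytes_last_30_days == 0 or e.connection_state != "Approved"
    )
```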

Multi-Region Cost Analysis

When deploying across multiple regions, costs can vary significantly depending on data transfer and network design. A detailed cost analysis is essential to maintain efficiency.

Data transfer costs are a big factor in multi-region setups. While inbound data transfer to Azure is free, outbound transfers incur charges that vary by region. Deploying private endpoints in the same region as consuming resources can help minimise these costs. For example, if you create a private endpoint in Region 1 but the service is hosted in Region 2, inter-region data transfer costs will apply. These charges are generally higher than intra-region transfers.
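
A rough monthly estimate helps compare same-region and cross-region placements. Private endpoints are charged per hour and per GB of data processed, and cross-region traffic can add a further per-GB charge. In the sketch below all rates are placeholders you must fill in from Azure's pricing pages for your regions, and 730 hours approximates one month.

```python
from dataclasses import dataclass


@dataclass
class Rates:
    """Placeholder unit prices: take real values for your regions from Azure's pricing pages."""
    endpoint_per_hour: float
    processed_per_gb: float
    inter_region_per_gb: float


def monthly_estimate(endpoints: int, gb_processed: float, cross_region_gb: float,
                     rates: Rates, hours: float = 730.0) -> float:
    """Endpoint-hours + data processed by the endpoints + inter-region transfer."""
    return (
        endpoints * hours * rates.endpoint_per_hour
        + gb_processed * rates.processed_per_gb
        + cross_region_gb * rates.inter_region_per_gb
    )
```

Running the function twice with the same endpoint count and traffic, once with `cross_region_gb=0` and once with the share of traffic that would cross regions, isolates the extra cost of placing endpoints away from their consumers.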

Hub-and-spoke architectures can help consolidate traffic patterns, reducing unnecessary cross-region data flows. Tools like Azure Firewall can also monitor and potentially reduce these flows, though additional routing complexity needs to be considered.

In disaster recovery scenarios, weigh the cost of maintaining standby capacity in secondary regions against the cost of failover and data transfer. Your decision should align with your recovery time objective (RTO) and recovery point objective (RPO).

Virtual network design also impacts costs:

- Dedicated virtual networks for private endpoints reduce route complexity and administrative overhead. Instead of creating individual /32 routes for each endpoint, you can set up a single route pointing to the network address space. However, this requires additional resources and may involve peering charges.

- Shared virtual networks, where private endpoints and virtual machines coexist, can save on infrastructure costs. However, route management becomes more complex, as each private endpoint adds a /32 system route that needs its own matching user-defined route if traffic must pass through a firewall. For on-premises traffic, this option avoids virtual network peering charges, making it cost-effective for hybrid deployments.

DNS forwarders add another layer of cost in hybrid cloud setups. To enable on-premises workloads to resolve private endpoint FQDNs, you’ll need a DNS forwarder in the virtual network linked to the private DNS zone. This could be a virtual machine running DNS services or a managed service like Azure Firewall. Costs include compute charges for the DNS forwarder VM or Azure Firewall instance, data transfer costs for DNS queries, and operational expenses for managing the forwarder. Alternatively, using Azure Private Resolver can simplify management as it’s a managed service, though it comes with separate charges.

For organisations looking to optimise their Azure spending while maintaining robust private endpoint deployments, resources like Azure Optimization Tips, Costs & Best Practices offer tailored strategies. These include setting up automated cost alerts to prevent unexpected charges, adopting a Zero Trust security model to tighten access control, and reviewing budgets monthly to stay on track.

Regular monitoring of Azure usage and performance is critical for cost control. Benchmark workloads to find over-provisioned or idle resources that can be resized or removed. Optimising network paths, for example by keeping endpoints in the same region as their consumers, can reduce both latency and data transfer costs. Additionally, make sure Network Security Groups are correctly configured on peered virtual networks to limit security risks.

Conclusion

Deploying Azure Private Endpoints effectively requires aligning your architecture with your organisation’s priorities - balancing cost, security, and operational needs. Whether you opt for a single-region setup, a hub-and-spoke model, a hybrid cloud approach, or a multi-region design, the choice should reflect your geographic footprint, compliance obligations, and how much complexity your team can manage.

A well-thought-out DNS configuration is essential for reliable FQDN resolution. Using a centralised private DNS zone in a hub topology can simplify management by eliminating redundant infrastructure. For hybrid setups, DNS forwarders or Azure Private Resolver can ensure seamless access to Azure services from on-premises workloads.

Once your deployment pattern is in place, cost management becomes a top priority. Your architecture should strike a balance between scalability and operational efficiency. For example, hub-and-spoke models can simplify DNS management and avoid route limits, while single-region deployments might better suit less complex workloads.

To manage costs effectively, consider auditing for unused endpoints, keeping endpoints within the same region to minimise data transfer fees, and optimising the number of endpoints per storage account. For deeper insights into cost-saving strategies, check out Azure Optimization Tips, Costs & Best Practices for expert advice on maintaining security without overspending.

Finally, let security guide your design choices. Use Azure Firewall for traffic inspection where necessary, but ensure your security requirements are clearly documented from the start to avoid expensive adjustments later on.

FAQs

How do Azure Private Endpoints improve security compared to public endpoints?

Azure Private Endpoints improve security by enabling resources to connect through a private, secure network, completely bypassing the public internet. This keeps data traffic confined to the Azure backbone network, significantly lowering the chances of unauthorised access or data breaches.

Private endpoints give the target service a private IP address in your network, and when you also disable public network access on that resource, its public attack surface is removed. Access can then be controlled through your own routing, network security groups and firewalls. They are especially useful in situations that demand strict security measures, such as hybrid cloud environments or secure application integrations.

What should I consider when deciding between single-region and multi-region deployments for Azure Private Endpoints?

When deciding between a single-region or multi-region deployment for Azure Private Endpoints, it's important to align your choice with your organisation's goals and operational needs.

A single-region deployment is straightforward and often more budget-friendly. It works well for businesses with operations concentrated in one area, as it minimises latency for users within the same geographic region. However, this setup comes with a drawback: your services could be impacted if there's a regional outage.

In contrast, a multi-region deployment offers better resilience and uptime by spreading resources across different Azure regions. This option is particularly suited for organisations with a global footprint or those running critical workloads that demand robust disaster recovery and high availability. That said, this approach can introduce added complexity and higher costs, so thorough planning is necessary to strike the right balance between performance, security, and spending.

How can organisations manage DNS configurations effectively in hybrid cloud environments with Azure Private Endpoints?

Azure Private Endpoints provide a way to securely connect to Azure services by assigning private IP addresses within your virtual network. To ensure smooth DNS management in hybrid cloud environments, it's crucial to integrate your on-premises DNS servers with Azure's private DNS zones. This setup allows private endpoints to be resolved seamlessly across both on-premises and Azure environments.

To keep traffic private, configure conditional forwarders on your on-premises DNS servers for the relevant public zones (such as blob.core.windows.net), pointing them at a DNS forwarder or Azure Private Resolver inbound endpoint in Azure. Queries for Azure resources then resolve through the private DNS zone to private IPs. It's also a good idea to periodically review and adjust your DNS settings to keep pace with changes in your organisation's hybrid cloud infrastructure.